Stop right there! If you think SASE is just another expensive security buzzword that only tech giants can afford, you’ve been fed some serious lies. I’m about to destroy five myths that are keeping your organization stuck in the stone age of network security, and by the end of this video, you’ll know exactly why everyone from Fortune 500 companies to small businesses is ditching their old security models for this game-changing approach.

Did you know that 90% of IT decision-makers completely misunderstand what SASE actually is? I’ve been hearing the same myths repeated over and over again, and honestly, it’s costing organizations millions in security breaches and wasted resources. Today, I’m debunking the five biggest lies about Secure Access Service Edge that the security industry doesn’t want you to know.

Before we dive in, I want to take a moment to say thank you for being here – your support fuels everything we do. If this video helps you see SASE in a new light, smash that subscribe button, hit like to spread these insights, and share this with one person who needs to hear it. Now, let’s dismantle these myths together…

SASE Implementation Roadmap

A successful SASE implementation requires careful planning and execution. Organizations should follow a structured approach that addresses both technical and organizational considerations to ensure a smooth transition to this new security and networking paradigm.

Assessment and Planning Phase

Before diving into implementation, organizations must conduct a thorough assessment of their current infrastructure and security needs. This involves identifying critical resources, applications, and data that require protection, as well as understanding how and by whom these assets are accessed. This initial evaluation helps establish a security framework that safeguards valuable assets while meeting compliance requirements.

Organizations should also define clear business objectives for their SASE implementation. Whether the goal is securing SD-WAN traffic, supporting remote work, enhancing zero-trust initiatives, or optimizing global connectivity, these objectives will guide subsequent implementation decisions. As Gartner recommends, engaging owners of strategic applications and workforce transformation teams during this phase ensures all requirements are properly addressed.

Stakeholder Identification and Engagement

A successful SASE deployment requires collaboration across multiple teams. Uniting security and network operations teams is particularly beneficial, as it ensures both security and performance aspects are considered. Appointing a neutral project leader can help ensure all requirements are effectively communicated and met.

Getting board approval is essential for network transformation projects of this scale. When presenting SASE to leadership, position it as a strategic enabler for IT responsiveness, business growth, and enhanced security. Articulate its long-term financial impact and ROI using real-world data and analyst insights to highlight tangible benefits.

Implementation Strategy Development

With assessment complete and stakeholders engaged, organizations should develop a detailed implementation roadmap that includes:

- Budget and resource planning to ensure adequate funding and personnel

- Vendor selection criteria based on integration capabilities with existing infrastructure

- A phased deployment approach to mitigate risks

- Policy development that aligns with security and compliance requirements

- User training and communication plans

Many organizations find success with a segmented implementation approach. Breaking the transition into smaller, manageable units limits strain on IT departments that must simultaneously maintain legacy systems. This approach also facilitates better resource management and allows for troubleshooting issues before they affect the entire organization.
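The segmented approach can be sketched as a small wave planner. This is only an illustration: the site names, the `risk` score, and the `max_sites_per_wave` parameter are hypothetical, and a real plan would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    users: int
    risk: int  # hypothetical business-criticality score, 1 (low) to 5 (high)


def plan_waves(sites: list[Site], max_sites_per_wave: int) -> list[list[str]]:
    """Group sites into rollout waves, lowest-risk and smallest sites first."""
    if max_sites_per_wave < 1:
        raise ValueError("max_sites_per_wave must be at least 1")
    ordered = sorted(sites, key=lambda s: (s.risk, s.users, s.name))
    return [
        [s.name for s in ordered[i:i + max_sites_per_wave]]
        for i in range(0, len(ordered), max_sites_per_wave)
    ]


# Hypothetical inventory used only to demonstrate the ordering.
sites = [
    Site("hq", users=1200, risk=5),
    Site("branch-a", users=40, risk=1),
    Site("branch-b", users=85, risk=2),
    Site("datacenter", users=10, risk=5),
    Site("branch-c", users=60, risk=1),
]
waves = plan_waves(sites, max_sites_per_wave=2)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Putting low-risk branches in the first wave means configuration problems surface where they do the least damage, and the most critical sites move last.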

Execution and Optimization

Implementation should begin with a pilot phase in a controlled environment. This allows teams to test configurations, identify potential issues, and make adjustments before wider deployment. Organizations should establish clear testing protocols and dedicate resources to troubleshooting, as SASE solutions must be thoroughly tested to avoid disruptions when deployed at scale.

As implementation progresses, continuous monitoring and optimization become essential. This includes:

- Troubleshooting configuration or deployment errors

- Testing security controls to ensure they’re functioning as expected

- Refining policies based on real-world performance

- Documenting lessons learned for future deployment phases

The complete SASE implementation typically spans 12-24 months, requiring patience and persistent focus on long-term objectives. By following this structured roadmap, organizations can successfully navigate the transition to SASE while minimizing disruption to business operations.

Hypervisor Security in SASE

Hypervisor security represents a critical but often overlooked component in the SASE architecture, particularly as organizations increasingly deploy virtualized security functions across distributed environments. As SASE combines network and security functions into a unified service delivered from the edge, the hypervisor layer becomes a potential attack vector that requires specialized protection strategies.

The Hypervisor Vulnerability Landscape

In multi-tenant SASE environments, hypervisors manage the virtualization infrastructure that hosts security services for multiple customers. This creates an attractive target for attackers, as compromising a hypervisor could potentially expose sensitive data from numerous organizations simultaneously. Traffic that flows through SASE services for security processing is typically unencrypted inside the SASE instance itself while it is inspected, which creates a privacy risk if the underlying system is compromised.

The attack surface expands significantly as SASE providers operate across:

- Multiple public cloud providers in different countries

- Various operating systems (different Linux distributions, Windows, macOS)

- Diverse virtualization technologies (VMs, Kubernetes clusters, containers)

This diversity widens the attack surface, as attackers need only find a weakness in one component to potentially gain access to the entire system.

Confidential Computing as a Mitigation Strategy

To address these vulnerabilities, leading SASE implementations are incorporating confidential computing technologies. This approach adds a critical layer of isolation by encrypting memory content during use, preventing unauthorized access even if an attacker gains root access to the infrastructure.

Confidential computing creates protected trust domains (TDs) for SASE components:

- Each pod or process operates within its own encrypted memory space

- The processor handles encryption/decryption as data moves between memory and processing

- Memory contents remain inaccessible even to users with root privileges

This architecture is particularly valuable for protecting sensitive operations like AI-powered security functions. For instance, when GenAI firewalls use AI models to classify content or detect jailbreak attempts, confidential computing ensures this analysis happens in a protected environment.

Architectural Considerations for Secure SASE Hypervisors

Implementing hypervisor security in SASE requires a thoughtful architectural approach. Many SASE providers deploy their services using containerized microservices orchestrated by Kubernetes, which introduces specific security considerations at the hypervisor level.

A robust SASE implementation with strong hypervisor security typically includes:

- Confidential containers that isolate each security function

- Attestation services that verify the integrity of the execution environment

- Secure service chaining that maintains protection as traffic flows between security functions

- Integration with specialized security offerings through a robust hypervisor

When evaluating SASE solutions, organizations should assess whether providers offer these hypervisor-level protections, particularly for access to critical systems such as remote desktops, servers running on hypervisors, and other sensitive infrastructure.
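To make the attestation idea concrete, here is a deliberately simplified sketch of the comparison step: each security function reports a digest, and only functions whose digest matches an expected value stay in the service chain. The allowlist, function names, and image contents are all hypothetical, and real attestation relies on hardware-signed reports rather than a bare hash.

```python
import hashlib
import hmac

# Hypothetical allowlist: security function name -> expected SHA-256 digest
# of its container image. Real deployments would verify a hardware-signed
# attestation report rather than a bare digest.
EXPECTED_DIGESTS = {
    "swg": hashlib.sha256(b"swg-image-v1").hexdigest(),
    "casb": hashlib.sha256(b"casb-image-v1").hexdigest(),
}


def is_trusted(function_name: str, reported_digest: str) -> bool:
    """Return True only if the reported digest matches the expected one."""
    expected = EXPECTED_DIGESTS.get(function_name)
    if expected is None:
        return False  # unknown functions are never trusted
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, reported_digest)


def untrusted_functions(chain: list[tuple[str, str]]) -> list[str]:
    """List the functions in a service chain that fail verification."""
    return [name for name, digest in chain if not is_trusted(name, digest)]


# Hypothetical chain in which the CASB image has been tampered with.
chain = [
    ("swg", hashlib.sha256(b"swg-image-v1").hexdigest()),
    ("casb", hashlib.sha256(b"tampered-image").hexdigest()),
]
print(untrusted_functions(chain))
```

The useful property is that the check fails closed: anything unknown or mismatched is treated as untrusted.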

The Role of AI in Hypervisor Security

As SASE architectures evolve, AI integration is becoming increasingly important for hypervisor security. AI-powered security functions can detect anomalous behavior that might indicate a hypervisor-level compromise, while instruction-level accelerators like Intel AMX can perform AI inferencing directly on the CPU rather than sending data to external GPUs.

This approach offers two significant advantages:

- Enhanced security by keeping sensitive data within the confidential computing boundary

- Reduced cost compared to GPU-based acceleration

The integration of AI with hypervisor security represents a future-ready approach to SASE implementation, particularly as threats continue to evolve in sophistication.

By addressing hypervisor security concerns, organizations can fully realize the benefits of SASE while maintaining robust protection for their most sensitive data and applications, regardless of where users access them from.

Cloud-Native Security Controls

The cloud-native architecture of SASE represents a fundamental shift in how security controls are designed, deployed, and managed across distributed environments. Unlike traditional security approaches that rely on hardware appliances or on-premises solutions, SASE’s cloud-native security controls provide consistent protection regardless of where users, data, or applications reside.

Core Cloud-Native Security Components

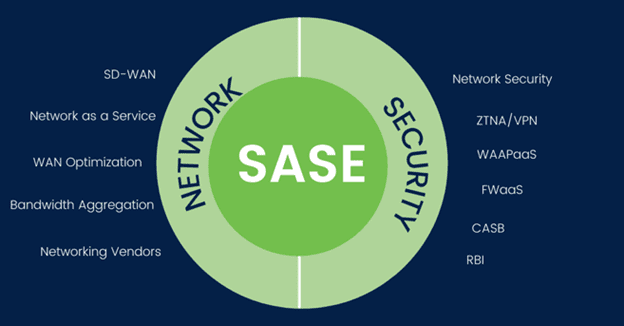

SASE integrates several essential cloud-native security functions that work together to create a comprehensive security posture:

- Secure Web Gateway (SWG) acts as a protective barrier between users and the internet, filtering web traffic to block malicious content and prevent access to risky websites. This cloud-delivered capability protects users from phishing attacks and malware downloads regardless of their location.

- Cloud Access Security Broker (CASB) provides visibility and control over cloud applications, enforcing security policies and preventing data loss. This component is crucial for organizations leveraging multiple SaaS applications, as it maintains consistent security across diverse cloud services.

- Zero Trust Network Access (ZTNA) replaces traditional VPNs by enforcing strict access controls based on user identity and context. The cloud-native implementation continuously verifies users and devices before granting access to resources, significantly reducing the risk of unauthorized access.

- Firewall as a Service (FWaaS) delivers enterprise-grade security policies through the cloud, protecting remote and branch locations without requiring local hardware. This allows organizations to extend consistent security policies to all locations without managing physical appliances.

Dynamic Policy Enforcement

A key advantage of cloud-native security controls in SASE is their ability to adapt to changing conditions. In hybrid cloud environments where workloads and data migrate between on-premises infrastructure and public cloud platforms, SASE enables dynamic security policy enforcement that follows the data.

This cloud-native approach ensures that security policies are consistently applied regardless of where resources are hosted—whether in private data centers or public clouds. The policies adapt automatically to protect against threats and maintain compliance with regulatory requirements as workloads move across environments.
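One way to picture a policy that follows the data is to key it on the data's classification instead of on where the workload runs. The sketch below assumes a made-up three-level classification scheme and two illustrative policy fields; none of these names come from a specific SASE product.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Policy:
    dlp_scan: bool
    mfa_required: bool


@dataclass(frozen=True)
class Workload:
    name: str
    classification: str  # hypothetical levels: "public", "internal", "restricted"
    location: str        # e.g. "on-prem" or "public-cloud"


# Hypothetical policy table keyed by classification, never by location.
POLICY_BY_CLASSIFICATION = {
    "public": Policy(dlp_scan=False, mfa_required=False),
    "internal": Policy(dlp_scan=True, mfa_required=False),
    "restricted": Policy(dlp_scan=True, mfa_required=True),
}


def resolve_policy(workload: Workload) -> Policy:
    """Look up the policy; unknown classifications get the strictest one."""
    return POLICY_BY_CLASSIFICATION.get(
        workload.classification, POLICY_BY_CLASSIFICATION["restricted"]
    )


payroll = Workload("payroll-db", "restricted", "on-prem")
migrated = replace(payroll, location="public-cloud")  # same data, new home

# The policy is unchanged because the lookup ignores the location field.
print(resolve_policy(payroll) == resolve_policy(migrated))
```

Because `resolve_policy` never reads `location`, moving the workload cannot silently weaken its protection.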

Integration with Native Cloud Services

Cloud-native SASE solutions leverage and extend native cloud security controls rather than replacing them. This integration allows organizations to:

- Take advantage of cloud providers’ built-in security capabilities

- Extend these capabilities with additional SASE functions

- Maintain consistent security across multi-cloud environments

This approach ensures that organizations can fully utilize the scalability and flexibility of cloud computing while maintaining a strong security posture across all environments.

Reduced Risk Through Cloud-Native Design

SASE’s cloud-native architecture addresses the unique challenges of securing distributed users and applications. By defining security—including threat protection and data loss prevention—as a core part of the connectivity model, SASE ensures all connections are inspected and secured regardless of location, application, or encryption status.

This design significantly reduces risk by shrinking the attack surface and limiting lateral movement within the network. For example, when a remote user accesses a cloud application, SASE’s cloud-native controls verify the user’s identity, check the device’s security posture, scan for malware, and enforce data protection policies, all through cloud-delivered services that don’t require traffic to backhaul through a corporate data center.
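The remote-user example can be sketched as an ordered chain of checks in which the first failure denies access. The `AccessRequest` fields and the reason strings are hypothetical stand-ins for the signals a real service would gather from identity providers, endpoint agents, and content scanners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    # Each field stands in for the result of a separate cloud-delivered check.
    user_authenticated: bool
    device_compliant: bool
    malware_detected: bool
    contains_sensitive_data: bool
    destination_sanctioned: bool


def evaluate(request: AccessRequest) -> tuple[str, str]:
    """Return (decision, reason); the first failing check denies access."""
    if not request.user_authenticated:
        return ("deny", "identity not verified")
    if not request.device_compliant:
        return ("deny", "device posture check failed")
    if request.malware_detected:
        return ("deny", "malware detected")
    if request.contains_sensitive_data and not request.destination_sanctioned:
        return ("deny", "data protection policy violation")
    return ("allow", "all checks passed")


upload = AccessRequest(
    user_authenticated=True,
    device_compliant=True,
    malware_detected=False,
    contains_sensitive_data=True,
    destination_sanctioned=False,
)
print(evaluate(upload))
```

In this example the user and device pass, but sending sensitive data to an unsanctioned application is still blocked by the data protection step.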

Scalability and Resilience

The cloud-native architecture of SASE security controls provides inherent advantages in scalability and resilience. Unlike traditional security solutions that require hardware upgrades to handle increased traffic, cloud-native controls can automatically scale to accommodate changing demands.

This elasticity ensures that security functions maintain performance even during traffic spikes or when supporting growing numbers of remote users. Additionally, the distributed nature of cloud services provides built-in redundancy, reducing the risk of security failures due to hardware issues or regional outages.
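The arithmetic behind that elasticity is simple enough to sketch. The function below is a toy model, assuming a fixed per-instance session capacity and a floor of two instances for redundancy; real autoscalers use richer signals, and both numbers here are invented.

```python
import math


def required_instances(
    active_sessions: int,
    sessions_per_instance: int,
    min_instances: int = 2,  # assumed floor so one failure never means an outage
) -> int:
    """Instances needed to serve the load without dropping below the floor."""
    if active_sessions < 0:
        raise ValueError("active_sessions cannot be negative")
    if sessions_per_instance < 1:
        raise ValueError("sessions_per_instance must be at least 1")
    needed = math.ceil(active_sessions / sessions_per_instance)
    return max(min_instances, needed)


# Hypothetical load levels: a quiet night, a normal day, a traffic spike.
for sessions in (500, 4500, 12001):
    print(sessions, "sessions ->", required_instances(sessions, 1000), "instances")
```

Rounding up matters: 12,001 sessions at 1,000 per instance needs 13 instances, not 12.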

By embracing cloud-native security controls within the SASE framework, organizations can achieve consistent, scalable protection across their increasingly complex and distributed environments, effectively securing the modern workplace without compromising user experience or application performance.

Edge Computing Compatibility Challenges

Infrastructure and Integration Challenges

The convergence of SASE with edge computing introduces significant infrastructure and integration challenges that organizations must navigate. These challenges stem from the complex nature of modern network architectures, the incorporation of cloud-based resources, and the necessity to align IT processes with security service edge requirements.

One primary challenge is the seamless integration between on-premises infrastructure and cloud environments, which requires striking a delicate balance between security and accessibility. Organizations implementing SASE and edge computing face difficulties when traditional network security functions and WAN capabilities—historically managed by separate teams using distinct tools—must be unified under a single model. This integration demands changes to established processes, which can be particularly disruptive to organizational workflows.

Limited expertise compounds these challenges, as SASE remains a relatively new concept that many organizations lack the in-house knowledge to implement effectively. This expertise gap is especially pronounced when dealing with edge computing’s distributed nature, which introduces additional complexity requiring robust connectivity solutions to ensure operational fluidity.

Change Management and Organizational Alignment

Implementing a SASE solution alongside edge computing involves significant changes to traditional infrastructure that may have become entrenched within corporate working practices. This transition can compromise productivity and collaboration while potentially creating security gaps until the new setup is fully operational.

A critical challenge is fostering cooperation between networking and security professionals. Transitioning to SASE should be a joint effort that considers both network and security functions, yet security teams often control the deployment process with network technicians factored in as an afterthought. This siloed approach can lead to suboptimal implementations that fail to address the unique requirements of edge computing environments.

Change management becomes essential, with organizations needing to set clear milestones and ensure stakeholders remain informed throughout the implementation process. Without careful management of this transition, organizations risk disruption to critical business operations and potential resistance from affected teams.

Vendor Selection and Interoperability

The quality of SASE products varies significantly across the market. Legacy security providers may lack expertise in cloud-native technologies, while other solutions may be overly complex or poorly supported, resulting in configuration issues that compromise performance. Some SASE vendors excel at network performance but provide inadequate security threat monitoring capabilities.

Interoperability presents another significant challenge, as SASE combines multiple functions that may cause integration issues when deployed across edge computing environments. Few vendors currently provide truly comprehensive SASE offerings, requiring organizations to critically evaluate potential partners to ensure they deliver reliable and effective components that work seamlessly with edge computing infrastructure.

Scalability and Cost Considerations

As edge computing environments grow, managing and scaling these networks efficiently becomes increasingly difficult. The distributed nature of edge computing introduces challenges in optimizing data flow across hybrid environments while maintaining stringent security protocols. Organizations must carefully consider the costs associated with upgrading or transitioning to these new models, factoring in hardware, software, and training expenses.

Scalability is particularly crucial in edge computing environments, where the ability to flexibly expand or contract services based on demand is essential in today’s dynamic business landscape. The rapid expansion of edge computing necessitates security solutions that can scale alongside network growth, a requirement that traditional security measures, often designed for more centralized systems, struggle to meet.

Data Sovereignty and Regulatory Compliance

Edge computing introduces data sovereignty challenges, where data must be processed and stored according to the legal and regulatory requirements of the region in which it is collected. This creates complications in managing data across different jurisdictions, requiring careful planning and compliance with various standards.

SASE implementations may face challenges in meeting specific regulatory compliance standards, particularly related to data localization requirements. Organizations must ensure their SASE and edge computing deployments adhere to these regulations, which can vary significantly across different regions and industries.
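A data localization rule can be pictured as a filter on which points of presence are allowed to process a given flow. Everything in this sketch is a placeholder: the PoP names, the region codes, and especially the rules table, which does not reflect any actual regulation.

```python
# Hypothetical PoP inventory: PoP name -> region it is hosted in.
POP_REGIONS = {"fra1": "eu", "nyc1": "us", "sin1": "apac"}

# Hypothetical residency rules: region where data is collected -> regions
# where it may be processed. Placeholder values, not real legal requirements.
ALLOWED_PROCESSING = {
    "eu": {"eu"},
    "us": {"us", "eu"},
    "apac": {"apac"},
}


def eligible_pops(data_region: str) -> list[str]:
    """Return the PoPs permitted to process data collected in a region."""
    allowed = ALLOWED_PROCESSING.get(data_region, set())
    return sorted(pop for pop, region in POP_REGIONS.items() if region in allowed)


print(eligible_pops("eu"))
print(eligible_pops("us"))
print(eligible_pops("latam"))  # no rule defined, so nothing is eligible
```

Returning an empty list for an unmapped region forces someone to make an explicit decision before any traffic is routed.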

By understanding and addressing these compatibility challenges, organizations can more effectively implement SASE in edge computing environments, creating secure, efficient, and compliant infrastructures that support modern distributed workforces and applications.

Implementation Hurdles Ahead

Integration with Existing Infrastructure

One of the most significant challenges organizations face when implementing SASE is integration with existing infrastructure and systems. Nearly half of technical executives and IT security practitioners worldwide identified this as their primary obstacle in 2024. Legacy systems, which many enterprises have invested in heavily over decades, often lack native compatibility with cloud-based SASE components, creating complex integration scenarios.

This integration challenge manifests in several ways:

- Existing security appliances may not easily connect to cloud-based security services

- On-premises applications might require special configurations to work with SASE’s zero-trust model

- Custom-built systems may lack APIs necessary for seamless integration

Organizations attempting DIY solutions by cobbling together disjointed sets of single-purpose appliances or services typically end up with undesirable outcomes, including complex infrastructure, high latency, and unpredictable attack surfaces.

Policy Management Complexity

Over 40% of organizations report that complexity in policy management across environments represents a major SASE implementation challenge. This complexity stems from the need to maintain consistent security policies across diverse environments—from branch offices to remote workers and cloud resources.

SASE requires organizations to:

- Translate existing network and security policies into a cloud-native framework

- Ensure consistent policy enforcement regardless of user location or device

- Maintain compliance with regulations that may vary by region or industry

As one network security architect at a Fortune 500 cybersecurity company explained: “Things have changed drastically since we implemented SASE. Users are proxied through these [SASE PoPs], and that makes troubleshooting a little different. It’s hard because we’re no longer looking at things from the laptop to the application. It’s really about looking at things from the SASE node to wherever your user is going.”

Balancing Security and Performance

A fundamental challenge in SASE implementation is balancing robust security with network performance. As organizations layer in more security controls, they often experience performance degradation that impacts user experience and productivity. This creates a difficult trade-off between comprehensive protection and operational efficiency.

Dell’Oro’s 2024 White Paper highlights this issue as a primary challenge: “Over emphasis on security at the expense of networking – we all agree that security is critical; it is non-negotiable. However, performance is as well, and many companies find it hard to balance both.”

A successful SASE solution requires a high-performance SD-WAN foundation before layering in security functions. Without this foundation, organizations risk creating bottlenecks that undermine the benefits of their SASE implementation.

Visibility and Monitoring Challenges

Organizations implementing SASE often struggle with visibility into their network traffic and performance. According to Enterprise Management Associates (EMA) research, monitoring the health and performance of SASE Points of Presence (PoPs) ranks as the second-biggest management challenge.

Specific visibility challenges include:

- Difficulty gaining insights into traffic between SASE PoPs and cloud infrastructure

- Limited visibility into encrypted traffic, which constitutes an increasing percentage of network communications

- Changed troubleshooting paradigms that require new monitoring approaches

These visibility gaps can lead to prolonged troubleshooting times, difficulty in optimizing network paths, and challenges in maintaining consistent performance across the SASE environment.
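As a starting point for PoP health monitoring, a team might track tail latency per PoP and flag the ones that exceed a threshold. The sketch below uses a nearest-rank percentile; the PoP names, the sample values, and the 50 ms threshold are invented for illustration.

```python
import math


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def degraded_pops(
    latency_ms: dict[str, list[float]], threshold_ms: float, pct: int = 95
) -> list[str]:
    """Return PoPs whose tail latency exceeds the threshold."""
    return sorted(
        pop
        for pop, samples in latency_ms.items()
        if percentile(samples, pct) > threshold_ms
    )


# Hypothetical round-trip samples in milliseconds for two PoPs.
samples = {
    "fra1": [12, 14, 13, 15, 11],
    "nyc1": [20, 22, 95, 21, 110],
}
print(degraded_pops(samples, threshold_ms=50))
```

Looking at the tail rather than the average is the point: `nyc1` has a reasonable median, but its slowest requests are what users actually complain about.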

Skills Gap and Change Management

The relatively new nature of SASE creates a significant skills gap within many organizations. Most IT professionals have years of experience managing on-premises operations but lack expertise in hybrid cloud environments. This skills shortage can slow implementation and create security vulnerabilities if configurations are improperly implemented.

Organizations must conduct thorough skill assessments of their existing network and security teams to determine if they can effectively implement and manage SASE. Many find it necessary to:

- Conduct training sessions and workshops to build internal expertise

- Consider engaging managed service providers (MSPs) with specialized SASE knowledge

- Create cross-functional teams that combine networking and security expertise

Without addressing these skills gaps, organizations risk incomplete implementations that fail to deliver the full benefits of SASE.

Looking Forward: The Evolution of SASE

Despite these challenges, SASE adoption continues to accelerate. Gartner predicted that by 2024, at least 40% of enterprises would have explicit strategies to adopt SASE, up from less than 1% at the end of 2018. This growth reflects the compelling value proposition SASE offers for securing today’s distributed workforces and applications.

As SASE evolves, several trends are emerging that will shape its future development:

- Increased convergence with edge computing to improve performance and reduce latency

- Greater adoption of Zero Trust Architecture (ZTA) principles within SASE frameworks

- Expansion of multi-cloud and hybrid deployment models to provide greater flexibility

Organizations that successfully navigate the implementation challenges of SASE will be well-positioned to create secure, efficient network architectures that support modern work patterns while maintaining robust protection against evolving threats.